In the fascinating landscape of artificial intelligence (AI), one area that has been gaining significant traction is audio AI. It’s an innovative field that merges the power of AI with sound, opening up myriad possibilities and applications.

But how exactly does it work? More importantly, how can you leverage it to create your own AI sounds?

Audio AI uses advanced algorithms and machine learning techniques to analyse and interpret audio signals. In speech applications, it can convert spoken audio into text and recognise words, phrases, and commands.

This technology relies on deep neural networks trained on large datasets, which improve its accuracy and help it adapt to different accents and languages.

Audio AI has various applications, including virtual assistants, transcription services, voice-controlled devices, and more.

In this article, I explain how audio AI applications work and share my own experiences with the technology.

What Is Audio AI?

Audio AI (short for audio artificial intelligence, and sometimes called sound recognition AI) is a technology that allows machines to understand and interpret audio signals.

Audio AI can decode these signals into meaningful information by leveraging machine learning algorithms and deep learning techniques.

The technology can recognise a wide range of sounds, from human speech to environmental noises, and it powers applications such as voice assistants, transcription services, and sound detection for safety.

This versatility has led to Audio AI’s adoption across numerous sectors, transforming the way we interact with technology.

How Does Audio AI Work?

Most Audio AI systems begin with a step known as feature extraction: converting raw audio input into a set of features, or data points, that can be analysed.

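The raw audio is first split into short, usually overlapping segments called frames, typically a few tens of milliseconds long (20 to 40 ms is common). Each frame is normally multiplied by a smooth window function, a step often called windowing, which reduces artefacts at the frame edges. Short frames are used because sound changes rapidly overall, yet its properties stay roughly stable over such brief intervals. The algorithm then extracts useful information from each frame, such as how much energy falls in each frequency band, and turns it into a format suitable for analysis.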
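The framing and windowing step can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a mono signal already loaded as a NumPy array; the 25 ms frame and 10 ms hop are common defaults rather than values from this article, and the function names are hypothetical.

```python
import numpy as np

def frame_signal(signal, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Split a 1-D signal into overlapping frames, one frame per row."""
    signal = np.asarray(signal, dtype=float)
    frame_len = int(round(sample_rate * frame_ms / 1000))
    hop_len = int(round(sample_rate * hop_ms / 1000))
    if len(signal) < frame_len:
        # Zero-pad very short clips so that at least one full frame exists.
        signal = np.pad(signal, (0, frame_len - len(signal)))
    n_frames = 1 + (len(signal) - frame_len) // hop_len
    # Row i of idx selects samples i*hop_len ... i*hop_len + frame_len - 1.
    idx = np.arange(frame_len)[None, :] + hop_len * np.arange(n_frames)[:, None]
    return signal[idx]

def magnitude_spectrogram(signal, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Apply a Hann window to each frame and keep the FFT magnitudes."""
    frames = frame_signal(signal, sample_rate, frame_ms, hop_ms)
    window = np.hanning(frames.shape[1])  # tapers each frame's edges towards zero
    # rfft returns frame_len // 2 + 1 frequency bins for each frame (row).
    return np.abs(np.fft.rfft(frames * window, axis=1))

if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr) / sr                      # one second of audio
    tone = np.sin(2 * np.pi * 440 * t)          # a 440 Hz test tone
    spec = magnitude_spectrogram(tone, sr)
    print(spec.shape)                           # (98, 201): frames x frequency bins
    freqs = np.fft.rfftfreq(400, d=1 / sr)      # bin centre frequencies for 400-sample frames
    print(round(float(freqs[spec.mean(axis=0).argmax()]), 1))  # 440.0
```

Each row of the result is one frame and each column one frequency bin, which is essentially a spectrogram. Libraries such as librosa offer fuller versions of this step, including mel-scaled features and MFCCs.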

The next step involves feeding these extracted features into a machine-learning model. This model has been trained on a vast amount of audio data, learning to recognise patterns and correlations between the features and the corresponding sound events. When presented with new audio input, the model uses what it has learned to predict what sounds are present.
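Production systems use neural networks here, but the train-then-predict idea can be shown with a much simpler stand-in: a nearest-centroid classifier, which averages the feature vectors of each label during training and assigns new input to the closest average. This is a swapped-in illustrative technique, not the method any particular Audio AI product uses; the class name and the two-number feature vectors are hypothetical (scikit-learn ships a fuller `NearestCentroid` if you want a library version).

```python
import numpy as np

class NearestCentroid:
    """Toy classifier: one mean feature vector (centroid) per label."""

    def fit(self, features, labels):
        features = np.asarray(features, dtype=float)
        labels = np.asarray(labels)
        self.classes_ = np.unique(labels)  # sorted list of distinct labels
        # "Training" here is simply averaging the feature vectors of each class.
        self.centroids_ = np.stack(
            [features[labels == c].mean(axis=0) for c in self.classes_]
        )
        return self

    def predict(self, features):
        features = np.atleast_2d(np.asarray(features, dtype=float))
        # Euclidean distance from every input row to every centroid.
        dists = np.linalg.norm(
            features[:, None, :] - self.centroids_[None, :, :], axis=2
        )
        return self.classes_[dists.argmin(axis=1)]

# Hypothetical 2-D features, e.g. (loudness, dominant frequency in kHz).
train_x = [[0.9, 0.5], [0.8, 0.6], [0.3, 3.0], [0.2, 3.2]]
train_y = ["dog_bark", "dog_bark", "car_alarm", "car_alarm"]
model = NearestCentroid().fit(train_x, train_y)
print(model.predict([[0.85, 0.55], [0.25, 2.9]]))  # ['dog_bark' 'car_alarm']
```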

Finally, the output of the AI model is post-processed. This could involve cleaning up the output, combining predictions over time to arrive at a final decision, or further analysis depending on the specific application of the Audio AI.
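One common form of post-processing is smoothing frame-by-frame predictions and merging them into timed events, so that a single stray frame is not reported as a separate sound. The sketch below assumes the model emits one label per frame at a fixed hop (10 ms here); the majority-vote window and the function names are illustrative choices, not a standard.

```python
from collections import Counter

def smooth_labels(frame_labels, window=5):
    """Replace each frame's label with the most common label in a centred window."""
    half = window // 2
    smoothed = []
    for i in range(len(frame_labels)):
        neighbourhood = frame_labels[max(0, i - half): i + half + 1]
        # most_common(1) returns [(label, count)]; ties go to the label seen first.
        smoothed.append(Counter(neighbourhood).most_common(1)[0][0])
    return smoothed

def to_segments(labels, hop_ms=10):
    """Merge runs of identical labels into (label, start_ms, end_ms) events."""
    segments = []
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            segments.append((labels[start], start * hop_ms, i * hop_ms))
            start = i
    return segments

raw = ["bg", "bg", "dog", "bg", "bg", "dog", "dog", "dog", "dog", "bg", "bg"]
smoothed = smooth_labels(raw, window=3)   # the isolated "dog" at frame 2 is removed
print(to_segments(smoothed, hop_ms=10))   # [('bg', 0, 50), ('dog', 50, 90), ('bg', 90, 110)]
```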

In my own work, training the machine learning model has been one of the most intricate and rewarding stages. This is where the model learns from the data to make accurate predictions. The process requires patience and careful tuning, but the results can be remarkable.

What Data Does Audio AI Use?

Audio AI leverages a diverse range of data, often called training data, to learn and make predictions. This data typically consists of an extensive collection of audio files, each associated with a label that describes the file’s content.

For example, in speech recognition, the data might include recordings of spoken words or phrases along with their corresponding text transcripts.

In sound recognition, the data might consist of various environmental sounds, like a dog barking or a car alarm, each labelled accordingly.

The quality and variety of this training data are crucial factors in the performance of the AI. The more varied and representative the data, the better the AI can understand and interpret new, unseen audio signals.
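Before training, it is worth checking how evenly the labelled clips are spread across classes, since a dataset dominated by one sound is less representative. A minimal sketch, assuming a hypothetical CSV manifest with `path` and `label` columns (a common layout, but not one this article prescribes):

```python
import csv
import io
from collections import Counter

def load_manifest(csv_file):
    """Read (path, label) pairs from a CSV file object with 'path' and 'label' columns."""
    return [(row["path"], row["label"]) for row in csv.DictReader(csv_file)]

def class_balance(rows):
    """Return {label: fraction of clips}, most frequent label first."""
    counts = Counter(label for _, label in rows)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.most_common()}

# Hypothetical manifest; in practice you might use open("manifest.csv", newline="").
sample = io.StringIO(
    "path,label\n"
    "clips/bark_001.wav,dog_bark\n"
    "clips/bark_002.wav,dog_bark\n"
    "clips/bark_003.wav,dog_bark\n"
    "clips/alarm_001.wav,car_alarm\n"
)
rows = load_manifest(sample)
print(class_balance(rows))  # {'dog_bark': 0.75, 'car_alarm': 0.25}
```

A heavily skewed result like this one suggests collecting more examples of the rarer sounds before training.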

In my experience, sourcing and preparing this data can be a time-consuming but essential part of creating a functional audio AI system.

How Can I Make My Own AI Sounds?

Creating your own AI sounds can be an exciting and innovative undertaking. You can design unique and personalised soundscapes using the right tools and techniques.

If you want a fast way to create your own sounds, ready-made software can generate AI voices and sounds for you.

For example, here is a great explainer video on generating your own voice with text-to-speech.

As a simple example, anyone using Windows can build a basic “text-to-speech” app with a few lines of VBScript written in Notepad:

- Open Notepad.

- Copy and paste the following code.

```vbscript
' Ask for some text, then read it aloud with the Windows speech engine (SAPI)
Dim Message, Speak
Message = InputBox("Enter text", "Speak")
If Message <> "" Then
    Set Speak = CreateObject("SAPI.SpVoice")
    Speak.Speak Message
End If
```

- Click the File menu, then Save As.

- Set “Save as type” to “All Files” and save the file with a .vbs extension, for example AudioAI.vbs.

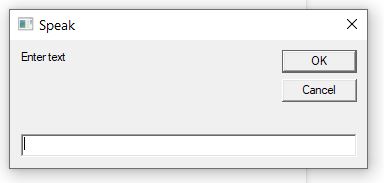

- Double-click the saved file, and an input box like the one in the image will open. Type the text you want your computer to speak into this box and click OK.

If you want to create your own AI sounds from scratch using your programming skills, that is also possible, but it is far more involved.

Creating AI sounds from scratch is a complex process that requires some understanding of machine learning and sound engineering, but it is achievable with time and perseverance. Here are the basic steps you will need to follow:

- Collecting Training Data: The first step is to gather a large and diverse dataset of sound samples. Depending on what you want your AI to learn, these could be anything from human speech to environmental noises. You should label each audio sample with the type of sound it is.

- Preprocessing the Data: Before the data can be used, it must be preprocessed. This usually means converting the audio into a representation the machine learning algorithm can work with, such as a spectrogram or MFCCs (Mel-Frequency Cepstral Coefficients).

- Training the Model: Next, you feed your preprocessed data into a machine-learning model. There are many models to choose from, but deep learning models like convolutional neural networks (CNNs) or recurrent neural networks (RNNs) are often used for sound recognition tasks. This is where the model learns to identify different types of sounds.

- Testing the Model: Once the model has been trained, it’s time to test it. This involves feeding it audio it has not seen during training and measuring how accurately it identifies the sounds.

- Tuning and Improving: Based on the results of your tests, you may need to tune your model or gather more training data to improve its accuracy. This is an iterative process that requires patience and fine-tuning.

- Generating Sounds: Generating new sounds requires a generative model, one trained to learn the distribution of the audio itself, rather than a model that only classifies sounds. Once such a model is trained and tuned, you can sample from its learned distribution or guide it towards specific types of sounds.

- Post-Processing: Finally, the generated sounds may need some post-processing to make them sound more natural or fit into a specific context. This could involve anything from adjusting the volume or pitch to adding reverb or other effects.
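The volume and click-removal parts of post-processing are straightforward to sketch with NumPy and Python’s built-in `wave` module. This assumes the generated sound is a mono float array in the range -1 to 1; the target peak of 0.9, the 20 ms fade length, and the output file name are arbitrary illustrative values, and pitch shifting or reverb are left out.

```python
import wave
import numpy as np

def normalise_peak(audio, target_peak=0.9):
    """Scale audio so its largest absolute sample equals target_peak (a volume adjustment)."""
    audio = np.asarray(audio, dtype=float)
    peak = np.max(np.abs(audio))
    return audio if peak == 0 else audio * (target_peak / peak)

def apply_fades(audio, sample_rate, fade_ms=20.0):
    """Apply a linear fade-in and fade-out to avoid clicks at the start and end."""
    out = np.asarray(audio, dtype=float).copy()
    n = min(int(sample_rate * fade_ms / 1000), len(out) // 2)
    if n > 0:
        ramp = np.linspace(0.0, 1.0, n)
        out[:n] *= ramp          # fade in
        out[-n:] *= ramp[::-1]   # fade out
    return out

def write_wav(path, audio, sample_rate):
    """Write mono float audio in [-1, 1] as a 16-bit PCM WAV file."""
    pcm = (np.clip(audio, -1.0, 1.0) * 32767).astype("<i2")  # little-endian int16
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)       # 2 bytes per sample = 16-bit
        wf.setframerate(sample_rate)
        wf.writeframes(pcm.tobytes())

if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr // 2) / sr
    generated = 0.3 * np.sin(2 * np.pi * 220 * t)   # stand-in for a model's output
    cleaned = apply_fades(normalise_peak(generated), sr)
    write_wav("generated_clean.wav", cleaned, sr)
```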

Remember, this complex process requires a fair amount of coding and understanding of both machine learning and audio processing. Don’t be discouraged if you don’t get it right the first time, as it’s a field where even professionals are constantly learning and experimenting.

Do I Have To Know How To Code To Generate AI Sounds?

While having a grasp of coding can give you more control and customisation over the AI sounds you create, it’s not strictly necessary to know how to code to generate AI sounds.

Various software tools and applications available today provide user-friendly interfaces and pre-built sound libraries, enabling individuals without programming knowledge to create and modify AI sounds.

These software tools often offer tutorials and wizards to guide you. However, if you wish to delve deeper into AI sound generation, learning to code can open up a wider range of possibilities, allowing for more detailed customisation and fine-tuning of your sounds.

In my own experience, combining both approaches, using software applications for quick results and code for deeper customisation, proved invaluable.

Final Thoughts

The field of Audio AI is vast and fascinating, offering an exciting blend of technology and creativity. Its applications are diverse, from speech recognition to sound generation, and creating your own AI sounds can be an incredibly rewarding experience.

Regardless of your level of coding proficiency, there are accessible tools and pre-built libraries that can help you embark on this journey. However, delving into code can unlock more advanced customisation, providing more refined control over your AI sounds.

As with any journey, there may be challenges along the way, but the potential rewards are immense: personal satisfaction and the creation of unique soundscapes.

As I have found in my own experiences, patience, perseverance, and a keen curiosity are crucial to exploring and succeeding in this intriguing field of AI.